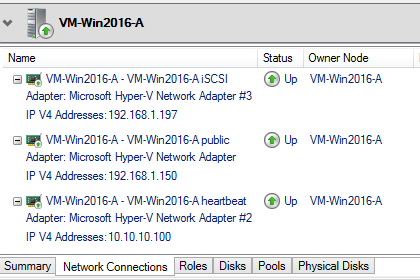

It was a side-by-side upgrade. On the new VM SQL Server 2016 SP1+CU5 was installed first. The database engine and report service are components installed. ReportServer database was created locally. Encryption key was backed up. There're a few options to get the upgrade done. I did try two of them.

Option # 1. Restore ReportSserver database

Restoring ReportServer database sounds the simplest way of restoring all report server contents in a quick way. Before you do this backup the reportserver db on both source and target servers. Also generate and backup the encryption keys on both servers. Restoring the database is straight forward. Getting the new Report Service up and running is also a breeze. Restoring the original encryption key is also successful. But the web portal wouldn't function and displayed an error message:

The version of the report server database is either in a format that is not valid, or it cannot be read. The found version is '143'. The expected version is '175'. (rsinvalidReportServerDatabase)

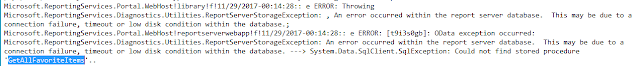

So I messed around with two tables in the ReportServer database - ServerUpgradeHistory and DBUpgradeHistory. The former shows one record with ServerVersion = 143. The latter contains a list of upgrade from DbVersion 143 all the way up to 175. Either updating the values from 143 to 175 or adding a second value of 143 didn't help. ReportServerService log looked ok. It's the ReportingServices.Portal.WebHost log that showed a weird error:

I did a search for object "GetAllFavoriteItems" in all SQL builds to no avail. I googled it but surprisingly i found no hit at all. Anyone knows anything about this stored procedure please do drop me a line.

Option # 2. Use SSDT to upgrade rdls

Since I needed to upgrade the report server project from SSRS 2008 BIDS to SSRS 2016 SSDT I took this approach. It was also very straight forward. In the SSDT environment I added all report rdls by dragging and dropping them to the solution explorer pane. I then went through each report definition to straighten out data source and data set. Saved them all followed by a deployment. The transition was smooth and transparent.

Option # 3. Use rss utility to copy report items

Using the built-in rss utility to copy rdls, data sources, datasets, etc is a viable solution. Had method 2# not worked out I would have dived into this remedy.

Option # 4. Use Third party deployment/upgrade tools

Never looked into this but I believe there're some tools out there for this purpose.